CI/CD for a Static Hugo Site

Now that I have a skeleton stack up and running, the next big hurdle in adding content is the high activation energy of deploying. Writing a new post is easy since it is just a markdown file (and I’m having to learn markdown syntax again for the 100th time), but getting that file to show up on the site right now is:

- Writing a markdown file

- Running hugo to generate the public directory

- Logging into the AWS console, 2FA etc.

- Opening S3, deleting the old folder and uploading the new one*

- Going to CloudFront and invalidating the cache

That’s a lot of manual steps for me to mess up, and they are all easier on an actual computer than on a mobile device (being able to publish from my phone is a nice-to-have for me).

Let’s try and simplify this with some CI/CD.

* In the process of automating it, I learned that hugo deploy could have done the same thing from my own machine if configured with a local AWS CLI. Had I known that at the start, I wouldn’t have gone down this CI/CD road to begin with - classic pitfall of doing too much. Oh well, it’s too late to back out now.
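For comparison, the local route from the footnote might look roughly like the sketch below. It assumes AWS credentials are already available on the machine (for example via aws configure) and that a [deployment] target exists in config.toml. The run and publish helpers and the DRY_RUN variable are made up for illustration; only the hugo commands come from this post.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Dry run by default: commands are printed, not executed.
# Set DRY_RUN=0 to actually build and deploy.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: build, then deploy.
publish() {
  run hugo                                         # build the site into public/
  run hugo deploy --maxDeletes -1 --invalidateCDN  # sync to S3, invalidate CloudFront
}

publish
```

Running it as-is only prints the two commands. Running it with DRY_RUN=0 would perform the real build and deploy.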

Set up a private git repo in GitHub

This one is pretty easy and straightforward. The only interesting thing here is to start from the Node .gitignore template, because the theme I am using is Node-based (even though I was trying to avoid Node).

I also added a few other lines to .gitignore specifically for Hugo and macOS.

    .DS_Store
    public/
    resources/_gen/
    .hugo_build.lock

Set up an IAM User

In AWS IAM, I created a new user with the following permissions/policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": [
            "s3:PutObject",
            "s3:PutBucketPolicy",
            "s3:ListBucket",
            "s3:DeleteObject",
            "cloudfront:CreateInvalidation",
            "s3:GetBucketPolicy"
          ],
          "Resource": [
            "arn:aws:cloudfront::[DISTRIBUTIONID]:distribution/*",
            "arn:aws:s3:::*/*",
            "arn:aws:s3:::[BUCKET_NAME]"
          ]
        }
      ]
    }

The user needs permissions to put the blobs for the site in the bucket and then kick off a CloudFront invalidation.

I also created credentials for the user in AWS and placed them into the GitHub Actions secret store.

Creating the GitHub Action

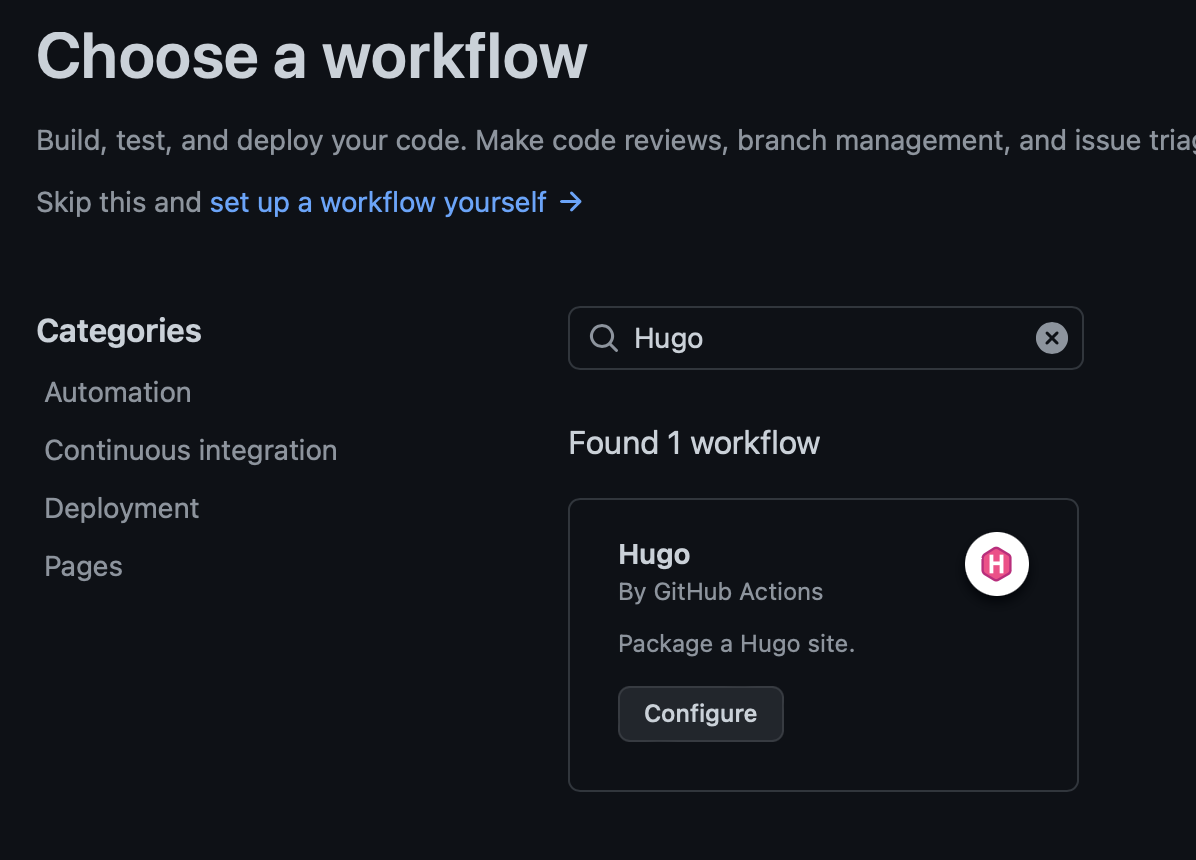

In GitHub, when you create an Actions workflow, you can pick from some templates. There is one for Hugo, so I started with that one.

In the template, I deleted the steps for GitHub Pages and npm, and added the following for the S3 deploy:

    # Deploy to S3
    - name: Hugo Deploy
      run: hugo deploy --force --maxDeletes -1 --invalidateCDN
      env:
        AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
        AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

The secrets for AWS are pulled from the GitHub Actions’ secret store.
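If a secret is missing or misnamed, GitHub substitutes an empty string and the deploy fails later with a less obvious credentials error. One optional guard, sketched here as an assumption and not something from the Hugo template, is a small check that could run in the same step before hugo deploy. The function name check_aws_env is hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical guard: fail early if the credentials that the deploy step
# expects in its environment are missing or empty.
check_aws_env() {
  local missing=0 name
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
    # ${!name:-} reads the variable whose name is stored in $name (bash only).
    if [ -z "${!name:-}" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Calling check_aws_env returns a non-zero status and names the missing variable on stderr, so a step written as check_aws_env && hugo deploy ... would stop before attempting the upload.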

Last bit of config

To tie it all together, I added the following to config.toml.

    [deployment]

    [[deployment.targets]]
    name = "<DEPLOYMENT_NAME>"
    URL = "s3://<S3_BUCKET_NAME>?region=<AWS_REGION_FOR_BUCKET>"
    # If you are using a CloudFront CDN, deploy will invalidate the cache as needed.
    cloudFrontDistributionID = "<CLOUDFRONT_DIST_ID>"

    [[deployment.matchers]]
    # Cache static assets for 20 years.
    pattern = "^.+\\.(js|css|png|jpg|gif|svg|ttf)$"
    cacheControl = "max-age=630720000, no-transform, public"
    gzip = true

    [[deployment.matchers]]
    pattern = "^.+\\.(html|xml|json)$"
    gzip = true

All of this is documented on Hugo’s website. When the command we set up in the action runs, it pushes the public directory to the S3 bucket and kicks off a cache invalidation, and the site is then updated.
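To make the matchers a little more concrete, here is a rough bash sketch that checks the 20-year max-age arithmetic and shows which of the two patterns a given path would hit. It assumes matchers are tried in order with the first match winning, which is my understanding of Hugo's behaviour. Hugo uses Go regular expressions, so bash's regex matching is only a stand-in that happens to agree for these simple patterns. The matcher_for function and its output labels are made up for illustration. Note that the doubled backslash in config.toml is TOML string escaping; the regex itself contains a single `\.`.

```shell
#!/usr/bin/env bash

# 20 years in seconds (ignoring leap days), the max-age used in config.toml.
echo $((20 * 365 * 24 * 60 * 60))

# Hypothetical helper: report which matcher from config.toml a path would hit.
matcher_for() {
  local static_re='^.+\.(js|css|png|jpg|gif|svg|ttf)$'
  local markup_re='^.+\.(html|xml|json)$'
  if [[ "$1" =~ $static_re ]]; then
    echo "static (long cache, gzip)"
  elif [[ "$1" =~ $markup_re ]]; then
    echo "markup (gzip only)"
  else
    echo "no matcher"
  fi
}

matcher_for "css/main.css"
matcher_for "posts/index.html"
matcher_for "fonts/body.woff2"
```

The last call falls through both patterns because woff2 is not in either extension list, so such files would be uploaded without the extra cache or gzip settings.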

TL;DR

Publishing stuff to this site now takes two simple steps:

- Write a markdown file

- Push it to the repo